As part of its ongoing commitment to protecting young users, Meta has expanded its Instagram teen DM safety features. The new updates are designed to make it easier for teens to recognize potential threats, report inappropriate behavior, and manage their interactions safely and confidently.

These changes are especially vital in an age where social media serves as a primary means of communication for millions of teens. Let’s explore how Meta is evolving Instagram to be a safer space for youth.

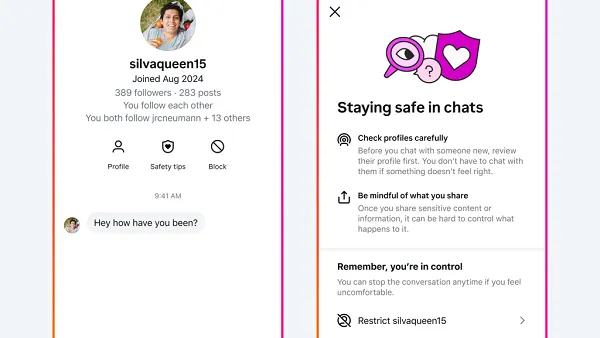

In-Chat “Safety Tips” and Quick Blocking Options

The most noticeable update is a new “Safety Tips” prompt, now embedded directly within Instagram chats. This contextual message offers real-time advice on how to identify suspicious behavior, such as scam attempts or inappropriate messages from strangers.

What sets this update apart is that it appears during actual chat conversations, nudging teens to think about safety while they are actively engaging. With one tap, users can open detailed guidance on how to spot red flags, avoid sharing personal details, and take preventive action.

Meta has also simplified the response process by embedding a quick “Block” button directly in the chat, so teens can act immediately without navigating to the other user’s profile. Faster blocking reduces hesitation or confusion about how to handle an uncomfortable situation.

Account Creation Date for Greater Transparency

Instagram now includes account creation dates—specifically, the month and year a user joined the platform—in new chat requests. This subtle yet powerful addition gives teens valuable context when approached by unfamiliar accounts.

For example, if a seemingly random message comes from a newly created account, teens may recognize the potential for spam, impersonation, or other malicious behavior. This builds a layer of informed decision-making into their social interactions, helping reduce risks.

Combined “Block and Report” Function for Fast Action

Previously, users had to block and report accounts separately, which discouraged some from completing both steps. To streamline the process, Meta now offers a single, combined “Block and Report” button inside DMs.

This is more than just a usability upgrade. According to Meta:

“While we’ve always encouraged people to both block and report, this new combined option will make this process easier, and help make sure potentially violating accounts are reported to us, so we can review and take action.”

By simplifying reporting, Meta hopes to increase the volume of harmful accounts flagged for moderation, which can lead to faster enforcement and platform-wide protection for more users.

Enhanced Safeguards for Adult-Managed Accounts Featuring Children

One of the more serious risks Meta continues to address is the misuse of adult-managed accounts that feature children, particularly those under 13. These accounts are typically run by parents or managers on a child’s behalf, and some belong to child influencers or content creators.

Meta has now applied extra safety restrictions to these accounts, including:

- Removal from recommendations shown to suspicious adult users

- Stricter filtering of DMs and comments using nudity detection and keyword blocks

- Limits on who can discover these accounts, especially adults who have previously been blocked by teens

These changes aim to reduce visibility to potential predators who might target child-centered content. It’s a strong step toward isolating problematic behavior before it can escalate.

Enforcement Results Reveal Ongoing Issues

Meta’s latest transparency data highlights just how necessary these protections are. Earlier this year, internal teams removed:

- 135,000 Instagram accounts for posting sexualized comments on, or requesting inappropriate images from, adult-managed accounts featuring children

- An additional 500,000 Facebook and Instagram accounts that were linked to those original accounts

This massive scale of enforcement underlines the seriousness of the problem. While Meta’s tools are improving, these figures show that platform-wide safety still requires vigilant monitoring and continuous upgrades.

Positive Response from Teens to Safety Prompts

Meta’s safety updates aren’t just theoretical; they’re already making an impact. The company reported that in June:

- Teens took about 2 million actions after seeing in-app safety notices. These included:

  - Nearly 1 million blocks

  - Around 1 million reports of suspicious activity

- A “Location Notice” appeared in chats over 1 million times, alerting teens when they were talking to someone based in another country. About 10% of users tapped to learn more about how to protect themselves.

These actions show that teens are engaging with safety tools when made easily accessible and relevant.

Nudity Protection Feature Reduces Exposure to Inappropriate Content

Meta’s nudity protection feature—which automatically blurs suspected explicit images in DMs—has also shown strong results:

- 99% of users, including teens, have chosen to keep the feature turned on

- In June, more than 40% of blurred images were never opened, limiting recipients’ exposure to potentially harmful or explicit content

These figures suggest that this passive safety mechanism curbs unwanted content without interfering with normal use.

Why These Instagram Teen DM Safety Tools Matter

Meta’s latest moves demonstrate a clear shift toward building safety into every interaction, especially for younger users. By prioritizing easy access to reporting, contextual tips, and visibility filters, Meta empowers teens to navigate Instagram more securely.

This also helps parents, educators, and content creators build confidence in the platform’s ability to protect younger audiences. While no digital space is completely risk-free, the combination of proactive safety education, stronger filters, and improved enforcement sets a high bar for other platforms to match.

Final Thoughts

The Instagram teen DM safety updates show that Meta is listening to concerns from parents, regulators, and users. By making safety more visible, actionable, and part of the daily user experience, Meta is creating a healthier, safer social environment for teens.

These improvements mark another step in Meta’s broader effort to reshape how platforms protect their most vulnerable users—not just in response to issues, but by preventing harm before it happens.