Meta’s Shift to AI-Powered Enforcement

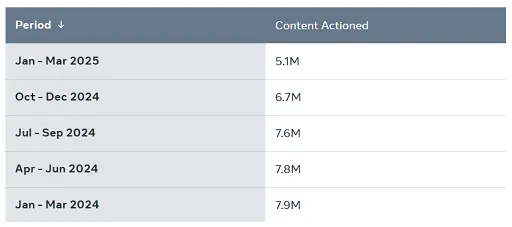

Meta, the owner of Facebook and Instagram, is progressively shifting major review processes, such as detecting rule violations, vetting algorithm changes, and assessing product risks, to artificial intelligence. According to internal documents, AI systems may soon handle up to 90% of these reviews, with humans overseeing only the highest-risk cases.

More Accuracy, More Risk

Meta says its use of AI has halved enforcement errors, such as wrongful content removals. Humans still handle borderline or complicated cases, but automating the bulk of decisions may let harmful or rule-breaking content slip through the cracks.

Why It Matters

Heavy reliance on automated moderation affects billions of people. Brands may see shifts in ad delivery and engagement, while users increasingly depend on AI to decide what they see and question whether those decisions are fair and accurate. Trust becomes a central concern, especially when the content under review is nuanced.

How Meta Manages Risk

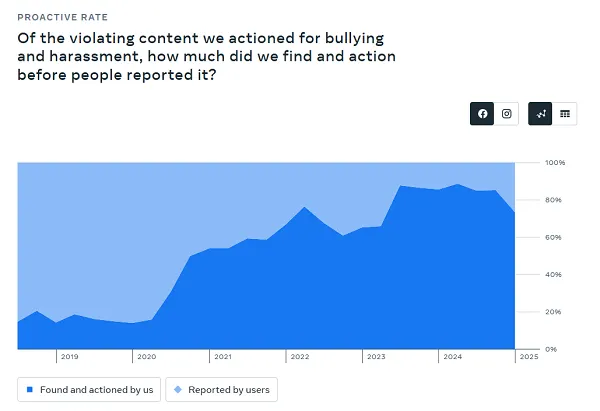

Meta says it uses AI to assess a piece of content's context, virality, and potential for harm before selecting cases for human review. Low- and medium-risk decisions are automated, while human judgment is reserved for complex cases classified as high-risk.
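To make the triage idea concrete, here is a minimal Python sketch of risk-based routing. It is not Meta's system: the signal names, the weights, and the 0.7 threshold are hypothetical assumptions chosen only for illustration. Each case receives a weighted risk score built from the three factors Meta names (context, virality, potential harm), and only scores at or above the threshold are sent to human reviewers.

```python
from dataclasses import dataclass
from enum import Enum


class Route(Enum):
    AUTO = "automated decision"
    HUMAN = "human review"


@dataclass
class Case:
    # Hypothetical signals, each assumed to be normalized to [0, 1].
    context_risk: float  # how problematic the surrounding context looks
    virality: float      # how quickly the content is spreading
    harm: float          # estimated severity of potential harm


# Assumed weights (summing to 1.0) and threshold, for illustration only.
WEIGHTS = {"context_risk": 0.3, "virality": 0.3, "harm": 0.4}
HIGH_RISK_THRESHOLD = 0.7


def risk_score(case: Case) -> float:
    """Combine the three signals into one weighted score in [0, 1]."""
    return (
        WEIGHTS["context_risk"] * case.context_risk
        + WEIGHTS["virality"] * case.virality
        + WEIGHTS["harm"] * case.harm
    )


def route(case: Case) -> Route:
    """Send high-risk cases to humans; automate low- and medium-risk ones."""
    if risk_score(case) >= HIGH_RISK_THRESHOLD:
        return Route.HUMAN
    return Route.AUTO


if __name__ == "__main__":
    print(route(Case(context_risk=0.1, virality=0.2, harm=0.1)))  # Route.AUTO
    print(route(Case(context_risk=0.9, virality=0.8, harm=0.9)))  # Route.HUMAN
```

A production system would likely rely on learned models rather than fixed weights; the hand-set score here only illustrates where the line between automated and human review gets drawn, and why the choice of threshold matters for what slips through.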

Criticisms and Concerns

Regulators and watchdog groups have warned that automated systems might miss sensitive content, especially content that targets or affects marginalized groups. For instance:

“It’s gravely concerning… some flagged content won’t get human review at all,” ⋯ “led to a toxic environment for marginalized groups”

Meta says rule-breaking content is rare overall, but critics worry that embedding AI too deeply in content policing could amplify biases or miss subtle but consequential violations.

The Human–AI Balance

Meta aims to use AI to improve speed, scale, and cost efficiency. Because automation can miss violations, human oversight remains essential. Finding the right mix, automating routine cases while preserving nuanced human judgment, remains the key challenge.

What Comes Next

- Expansion of AI reviews: More content categories and decisions are likely to fall under AI control.

- Heightened oversight: Regulators and advocacy groups will likely demand transparency, fairness, and bias checks.

- Industry ripple effect: As Meta doubles down on AI, other platforms may follow suit or instead push for stronger human oversight.